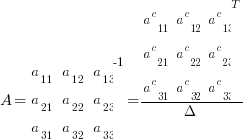

Where the superscript c denotes the cofactor of the matrix element and the scaling factor is the reciprocal of the determinant of A.

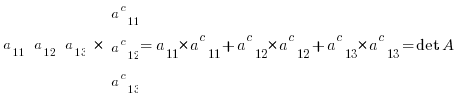

If you evaluate the row=1, column=1 product of the original matrix with its inverse, you see that row=1 × column=1 results in:

……(duh! What a setup: elements times cofactors sum to the determinant by definition!)

This is what you would expect before the scaling factor of the determinant of A is introduced; dividing by the determinant then turns the entry into 1. In fact, every main-diagonal position results in the same value.
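If you want to check the diagonal claim numerically, here is a minimal sketch in plain Python. The 3×3 matrix `A` and the helper names `minor`, `det` and `cofactor` are made up for illustration and are not part of the derivation above. It multiplies each row's elements by that same row's cofactors and compares the sums with the determinant.

```python
def minor(m, i, j):
    """Return a copy of m with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def cofactor(m, i, j):
    """Signed minor of element (i, j)."""
    return (-1) ** (i + j) * det(minor(m, i, j))


# Example matrix, chosen arbitrarily for this sketch.
A = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]

n = len(A)

# Row i of A times the cofactors of that same row: one value per diagonal position.
diagonal = [sum(A[i][j] * cofactor(A, i, j) for j in range(n)) for i in range(n)]

print("det(A)   =", det(A))
print("diagonal =", diagonal)
```

Every entry of `diagonal` should match `det(A)`, so dividing by the determinant leaves ones down the main diagonal.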

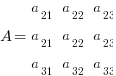

If you evaluate the row=2, column=1 product, it is equivalent to the determinant of the following matrix:

and since it has duplicate rows, the determinant will always equal zero. It is easy to see that all off-diagonal values result in the same condition. Thus, by this method you can rapidly see the internal workings of how and why a matrix inverse works. No more mysteries with an infinity of steps deriving the matrix inverse. Now you can just see it all at once in your head!
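The off-diagonal argument can be checked the same way. This is only a sketch: the example matrix `A` and the helpers `minor` and `det` are assumptions made for illustration. It builds the transposed cofactor matrix (called `adj` here), evaluates row 2 times column 1, compares it with the determinant of a matrix whose first row has been overwritten by the second, and then forms the full product before dividing by the determinant.

```python
def minor(m, i, j):
    """Return a copy of m with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


# Example matrix, chosen arbitrarily for this sketch.
A = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]

n = len(A)

# Cofactor matrix, then its transpose (the unscaled inverse).
C = [[(-1) ** (i + j) * det(minor(A, i, j)) for j in range(n)] for i in range(n)]
adj = [[C[j][i] for j in range(n)] for i in range(n)]

# Row 2 of A times column 1 of adj (zero-based indices 1 and 0).
row2_col1 = sum(A[1][j] * adj[j][0] for j in range(n))

# The same sum is the determinant of A with row 1 replaced by a copy of row 2.
duplicated = [A[1], A[1], A[2]]

# Full product A * adj, before dividing by the determinant.
product = [[sum(A[i][k] * adj[k][j] for k in range(n)) for j in range(n)]
           for i in range(n)]

print("row 2 x column 1     =", row2_col1)
print("det(duplicated rows) =", det(duplicated))
print("A * adj              =", product)
```

The product should come out as the determinant times the identity matrix, which is why dividing by the determinant gives the inverse.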
