Solution of simultaneous linear equations


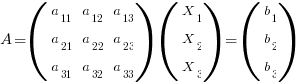

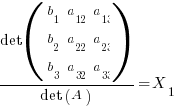

Multiplying the first column by the first unknown, $x_1$, alters the determinant as follows:

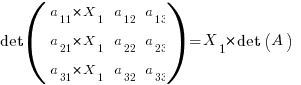

Now you can multiply the other columns by anything you like and add them to the first column to your heart's content: this does not alter the determinant, because whatever is added is linearly dependent on the other columns.

- Multiply column 2 by $x_2$ and add to column 1

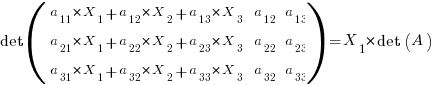

- Multiply column 3 by $x_3$ and add to column 1

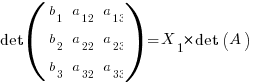
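The column steps above can be checked numerically. The following is a minimal sketch, assuming a 3×3 system `A @ x = b` with unknowns named `x[0]`, `x[1]`, `x[2]` (standing in for $x_1, x_2, x_3$); the matrix and solution values are made up purely for illustration and are not taken from the figures.

```python
import numpy as np

# Hypothetical 3x3 system A @ x = b, chosen only for illustration.
A = np.array([[ 2.0,  1.0, -1.0],
              [-3.0, -1.0,  2.0],
              [-2.0,  1.0,  2.0]])
x = np.array([2.0, 3.0, -1.0])   # assumed solution vector
b = A @ x                        # right-hand side implied by that solution

M = A.copy()
M[:, 0] *= x[0]                  # multiply column 1 by x1: det scales by x1
det_after_scaling = np.linalg.det(M)

M[:, 0] += x[1] * A[:, 1]        # add x2 * column 2: det unchanged
M[:, 0] += x[2] * A[:, 2]        # add x3 * column 3: det unchanged
det_after_adding = np.linalg.det(M)

# After the three steps, column 1 should coincide with b.
first_column_is_b = np.allclose(M[:, 0], b)
```

Under these assumptions, `det_after_scaling` should match `x[0] * np.linalg.det(A)`, and `det_after_adding` should agree with it up to floating-point error.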

Woot! That first column now looks like the b vector! Substitute:

Dividing both sides by $\det(A)$ yields:

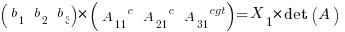
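Repeating the same argument for each column in turn gives what is usually called Cramer's rule. Here is a rough sketch of it in Python with NumPy; the function name `cramer_solve` is hypothetical, and the approach is meant to illustrate the derivation rather than to be an efficient solver.

```python
import numpy as np

def cramer_solve(A, b):
    """Solve A @ x = b by replacing each column of A with b in turn."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    det_A = np.linalg.det(A)
    if np.isclose(det_A, 0.0):
        raise ValueError("det(A) is (numerically) zero; no unique solution")
    x = np.empty(len(b))
    for k in range(len(b)):
        A_k = A.copy()
        A_k[:, k] = b                      # column k replaced by b
        x[k] = np.linalg.det(A_k) / det_A  # ratio of the two determinants
    return x
```

For anything beyond small systems, `np.linalg.solve` is the more practical choice; the determinant ratio is mainly useful for seeing why the solution has the form it does.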

Which lends itself to the following form:

Where c denotes the cofactor of the associated matrix element
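As a sketch of that form for the first unknown, assume a 3×3 system with entries $a_{ij}$, right-hand side $b_i$, and $c_{ij}$ for the cofactor of $a_{ij}$ (this notation is an assumption and may differ from the figures). Expanding the substituted determinant along its first column would then give:

```latex
x_1
  = \frac{1}{\det(A)}
    \begin{vmatrix}
      b_1 & a_{12} & a_{13} \\
      b_2 & a_{22} & a_{23} \\
      b_3 & a_{32} & a_{33}
    \end{vmatrix}
  = \frac{b_1 c_{11} + b_2 c_{21} + b_3 c_{31}}{\det(A)},
\qquad
\begin{aligned}
  c_{11} &= a_{22} a_{33} - a_{23} a_{32}, \\
  c_{21} &= -\left(a_{12} a_{33} - a_{13} a_{32}\right), \\
  c_{31} &= a_{12} a_{23} - a_{13} a_{22}.
\end{aligned}
```

None of the three cofactors involves an entry from the first column of $A$.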

Observations

- The solution does not depend on the direction of the coefficients

- The solution is scaled by the coefficients

- There is a lot here that can be said to be "vector-like". The scaled columns added to column 1 point in the same directions as the other columns (basis vectors?), and thus their contribution through the cross product is zero!

- The dot product of the b vector with the cofactor vector (generated by a cross product of the other columns) shows that the solution depends on b only through its projection onto the cofactor vector, with det(A) setting the scale

- The cofactor vector is orthogonal to all the other column vectors, so only the component of b in that orthogonal direction counts in the solution
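The vector reading in these observations can be sketched numerically for the 3×3 case. The example below assumes the columns of `A` are named `a1`, `a2`, `a3` and uses a made-up system; for a 3×3 matrix, the cofactors of the first column, collected as a vector, equal the cross product of the other two columns.

```python
import numpy as np

# Hypothetical 3x3 example; a1, a2, a3 are the columns of A.
A = np.array([[ 2.0,  1.0, -1.0],
              [-3.0, -1.0,  2.0],
              [-2.0,  1.0,  2.0]])
b = np.array([8.0, -11.0, -3.0])
a1, a2, a3 = A[:, 0], A[:, 1], A[:, 2]

# Cofactor vector for the first column: the cross product a2 x a3.
c = np.cross(a2, a3)

# It is orthogonal to the columns it was built from.
orthogonal_to_a2 = np.isclose(c @ a2, 0.0)
orthogonal_to_a3 = np.isclose(c @ a3, 0.0)

det_A = a1 @ c          # scalar triple product a1 . (a2 x a3)
x1 = (b @ c) / det_A    # first unknown as a ratio of two dot products
```

Because `c` is orthogonal to `a2` and `a3`, any part of `b` lying in the plane of those two columns drops out of `b @ c`, which is the sense in which only the orthogonal component of b matters for the first unknown.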
