Maximum Entropy Distribution for Random Variable of Extent [0,Infinity] and a Mean Value Mu

Maximum Entropy Principle Table of Contents TOC

- Private: Derivation of the Planck Relation and Maximum Entropy Principle

- Maximum Entropy Distribution for Random Variable of Extent [0,Infinity] and a Mean Value Mu

- The Maximum Entropy Principle – The distribution with the maximum entropy is the distribution nature chooses

- Use of Maximum Entropy to explain the form of Energy States of an Electron in a Potential Well

- Lagrange Multiplier Maximization Minimization Technique

- Derivation of Nyquist 4KTBR Relation using Boltzmann 1/2KT Equipartition Theorem

- Heuristic method of understanding the shapes of hydrogen atom electron orbitals

- Derivation of the Normal Gaussian distribution from physical principles – Maximum Entropy

End TOC

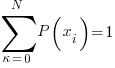

The maximum entropy constraints are as follows:

- Over the interval [0,infinity]

-

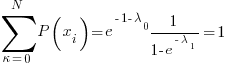

…. the sum over all probabilities must = 1

-

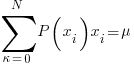

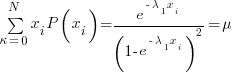

…. given an average value, AKA the "mean"

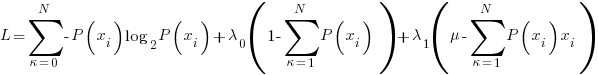
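Written out in symbols, the setup looks roughly like this. This is only a sketch in my own assumed notation: the variable takes the values n = 0, 1, 2, …, p_n is the probability of the value n, and H is the entropy in bits. These labels are assumptions and may not match the symbols used in the images above.

```latex
\begin{aligned}
\text{maximize}\quad   & H = -\sum_{n=0}^{\infty} p_n \log_2 p_n \\
\text{subject to}\quad & \sum_{n=0}^{\infty} p_n = 1 \\
                       & \sum_{n=0}^{\infty} n \, p_n = \mu
\end{aligned}
```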

The Lagrangian is formed as follows:

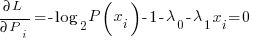

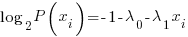

…. setting the derivative equal to zero to find the extremum

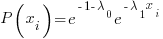
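For readers who want the step spelled out, here is a sketch of the Lagrangian and its derivative. It assumes p_n is the probability of the value n = 0, 1, 2, … and that the two multipliers are called λ_0 (normalization) and λ_1 (mean). The names λ_0, λ_1, C and x are my own labels, not necessarily the ones in the images.

```latex
\begin{aligned}
\mathcal{L} &= -\sum_{n} p_n \log_2 p_n
               - \lambda_0 \Big( \sum_{n} p_n - 1 \Big)
               - \lambda_1 \Big( \sum_{n} n \, p_n - \mu \Big) \\
\frac{\partial \mathcal{L}}{\partial p_n}
            &= -\log_2 p_n - \frac{1}{\ln 2} - \lambda_0 - \lambda_1 n = 0 \\
p_n         &= 2^{-(\lambda_0 + 1/\ln 2)} \, 2^{-\lambda_1 n} = C \, x^n,
\qquad C = 2^{-(\lambda_0 + 1/\ln 2)}, \quad x = 2^{-\lambda_1}
\end{aligned}
```

So whatever the base of the log, the extremum has the form of a constant times a geometric factor x^n. The base only changes what the multipliers have to be.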

Allowing the undetermined constant to take up the slack to turn the base 2 log into a natural log:

Using the sum of probabilities = 1 criterion:

(See below for derivation.)

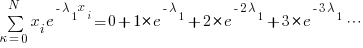

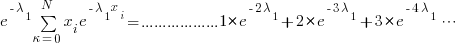

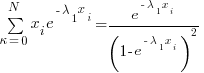
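A quick numerical sanity check is possible here. The sketch below assumes the derivation lands on the geometric form p_n = (1 − x)·x^n with x = μ/(1 + μ) for n = 0, 1, 2, …, which is what the two constraints give for a solution of the form C·x^n. The function names and the choice of a Poisson distribution as the comparison are mine, purely for illustration.

```python
import math


def geometric_pmf(mu, n_terms):
    """p_n = (1 - x) * x**n with x = mu / (1 + mu), for n = 0 .. n_terms - 1."""
    x = mu / (1.0 + mu)
    return [(1.0 - x) * x**n for n in range(n_terms)]


def poisson_pmf(mu, n_terms):
    """Poisson probabilities with the same mean; used only as a comparison."""
    p = [math.exp(-mu)]
    for n in range(1, n_terms):
        p.append(p[-1] * mu / n)
    return p


def entropy_bits(p):
    """Shannon entropy in bits, skipping zero-probability terms."""
    return -sum(q * math.log2(q) for q in p if q > 0.0)


def mean(p):
    """Mean of a distribution over n = 0, 1, 2, ..."""
    return sum(n * q for n, q in enumerate(p))


mu = 3.0
n_terms = 400  # truncation point; the neglected tail is negligible for mu = 3
geo = geometric_pmf(mu, n_terms)
poi = poisson_pmf(mu, n_terms)

print("geometric: sum =", sum(geo), "mean =", mean(geo), "H =", entropy_bits(geo))
print("poisson:   sum =", sum(poi), "mean =", mean(poi), "H =", entropy_bits(poi))
```

Both distributions satisfy the same two constraints, and the geometric one should come out with the larger entropy.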

Derivation of mean value infinite sum:

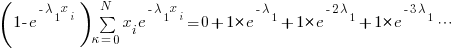

Subtracting, we get the same old geometric series that we all know:

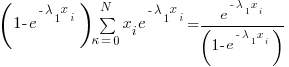

Rearranging terms:
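The whole subtract-and-rearrange step can be sketched as follows, assuming the series variable is called x with |x| < 1 and the mean-value sum is called S. Both names are my own labels.

```latex
\begin{aligned}
S      &= \sum_{n=0}^{\infty} n \, x^n = x + 2x^2 + 3x^3 + \cdots \\
xS     &= x^2 + 2x^3 + 3x^4 + \cdots \\
S - xS &= x + x^2 + x^3 + \cdots = \frac{x}{1 - x} \\
S      &= \frac{x}{(1 - x)^2}
\end{aligned}
```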

Another way of looking at the series:

Infinite Series Multiplication Table: the product of the 2 series is the sum of all the product entries ad infinitum.

The table uses 2 exponential series, each starting with 1. To get the same series as the solution in the derivation above, multiply the result by

It forms a sort of number wedge or number cone. I wonder if it extends to 3 dimensions?
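The multiplication table idea can be checked with a few lines of code. The sketch below assumes both series are 1 + x + x² + … (the symbol x and the function names are my own). Every cell (i, j) of the table contributes to the coefficient of x^(i+j), so collecting cells along the diagonals gives the coefficients 1, 2, 3, … One more factor of x shifts these to match the sum of n·x^n.

```python
def series_product_coeffs(n_terms):
    """Coefficients of (1 + x + x^2 + ...)^2, truncated to the first n_terms powers.

    Each (i, j) pair is one cell of the multiplication table, contributing
    1 * 1 to the coefficient of x^(i + j).
    """
    coeffs = [0] * n_terms
    for i in range(n_terms):          # row: x^i from the first series
        for j in range(n_terms - i):  # column: x^j, keeping i + j < n_terms
            coeffs[i + j] += 1
    return coeffs


def shifted_series_sum(x, n_terms=200):
    """Numerically sum x * (1 + 2x + 3x^2 + ...), i.e. the sum of n * x^n (needs |x| < 1)."""
    coeffs = series_product_coeffs(n_terms)
    return x * sum(c * x**k for k, c in enumerate(coeffs))


print(series_product_coeffs(6))   # [1, 2, 3, 4, 5, 6]
print(shifted_series_sum(0.5))    # close to 0.5 / (1 - 0.5)**2 = 2.0
```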

Observations ( Need to complete this )

- ….delay like Z transform

- continuous form correspondence with discrete form

2 Comments

Prof Von NoStrand · June 29, 2014 at 2:11 pm

I wanna see graphs and pictures and stuff!

Freemon SandleWould · June 29, 2014 at 2:13 pm

You want math porn!