The Maximum Entropy Principle – The distribution with the maximum entropy is the distribution nature chooses

Maximum Entropy Principle Table of Contents

- Derivation of the Planck Relation and Maximum Entropy Principle

- Maximum Entropy Distribution for Random Variable of Extent [0,Infinity] and a Mean Value Mu

- The Maximum Entropy Principle – The distribution with the maximum entropy is the distribution nature chooses

- Use of Maximum Entropy to explain the form of Energy States of an Electron in a Potential Well

- Lagrange Multiplier Maximization/Minimization Technique

- Derivation of Nyquist 4kTBR Relation using Boltzmann ½kT Equipartition Theorem

- Heuristic method of understanding the shapes of hydrogen atom electron orbitals

- Derivation of the Normal Gaussian distribution from physical principles – Maximum Entropy


In a previous article, entropy was defined as the expected number of bits in a binary number required to enumerate all the outcomes. It was expressed as follows:

$$\text{entropy} = H(x) = \sum_{i=1}^{N} \left[ -P(x_i)\,\log_2 P(x_i) \right]$$
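A minimal Python sketch of this formula, assuming the probabilities arrive as a list that already sums to 1. The function name `entropy_bits` is an illustrative choice, not something from the original article.

```python
import math


def entropy_bits(probs):
    """Shannon entropy H = sum of -p * log2(p), measured in bits.

    Outcomes with p == 0 are skipped, following the usual
    convention that 0 * log2(0) contributes nothing.
    """
    return sum(-p * math.log2(p) for p in probs if p > 0)


print(entropy_bits([0.5, 0.5]))   # fair coin: 1.0
print(entropy_bits([0.25] * 4))   # four equally likely outcomes: 2.0
print(entropy_bits([1.0]))        # a certain outcome: 0.0
```

A fair coin needs exactly one bit, four equally likely outcomes need two, and an outcome that is certain needs none.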

In physics (nature), it is found that the probability distribution that represents a physical process is the one with the maximum entropy given the constraints on the physical system. What are constraints? They are the things we know for certain about the system; for a die, the constraint used below is that the probabilities of its faces must sum to 1. An example of a probabilistic system is a die with 6 sides. For now, pretend you do not know that it is equally likely to show any one of its 6 faces when you roll it. Assume only that it is balanced.

For a die, the summation above amounts to the following sort of computation:

- Initial assumption: a set of 6 probabilities that sum to 1. This much is a given, since the die has to land on one of its 6 faces unless it stands on edge, Twilight Zone style. Let's assume $P(x_i)$ = 0.05, 0.05, 0.05, 0.05, 0.05, 0.75. You know instinctively this is not correct, but it demonstrates the maximum entropy principle.

The total entropy given these probabilities is $5 \times 0.05 \times 4.322 + 0.75 \times 0.415 = 1.0805 + 0.311 \approx 1.39$ bits, where $4.322 = -\log_2 0.05$ and $0.415 = -\log_2 0.75$.

Let us use our common sense now. We know there are 6 equally probable states the die can roll, so it's easy to calculate the number of bits required:

- Bits required = $\log_2 6 \approx 2.585$ bits
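The two numbers in this comparison can be checked with a few lines of Python that mirror the hand arithmetic term by term, using only the standard `math` module:

```python
import math

# Lopsided guess: five faces at 0.05 and one face at 0.75.
# Each term is p * (-log2 p): -log2(0.05) ≈ 4.322 and -log2(0.75) ≈ 0.415.
h_skewed = 5 * 0.05 * -math.log2(0.05) + 0.75 * -math.log2(0.75)

# Six equally likely faces: 6 * (1/6) * log2(6) simplifies to log2(6).
h_uniform = math.log2(6)

print(f"lopsided guess: {h_skewed:.2f} bits")   # lopsided guess: 1.39 bits
print(f"fair die:       {h_uniform:.3f} bits")  # fair die:       2.585 bits
```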

Thus our initial assumption of probabilities yields less entropy than the 2.585 bits common sense tells us to expect. How do we find the maximum entropy possible?

- Use the Lagrangian (Lagrange multiplier) maximization method.

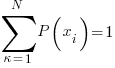

- Maximize the entropy expression subject to the constraint that the sum over all probabilities must equal 1, i.e. $\sum_{i=1}^{N} P(x_i) = 1$.

The Lagrangian is formed as follows:

$$L = \sum_{i=1}^{N} \left[ -P(x_i)\,\log_2 P(x_i) \right] + \lambda\left(1 - \sum_{i=1}^{N} P(x_i)\right)$$

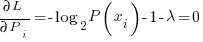

Now, differentiating the Lagrangian with respect to each $P(x_i)$ and setting the derivative to 0, we can find the probabilities that maximize the entropy.

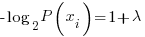

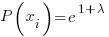
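Written out term by term, using $\frac{d}{dP}\log_2 P = \frac{1}{P\ln 2}$ and the fact that the constraint term contributes $-\lambda$, the derivative and the resulting expression for each probability are:

$$
\frac{\partial L}{\partial P(x_i)} = -\log_2 P(x_i) - \frac{1}{\ln 2} - \lambda = 0
\quad\Longrightarrow\quad
P(x_i) = 2^{-\lambda - 1/\ln 2}
$$

The right-hand side contains no $i$, so it is the same value for every face.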

Solving for $P(x_i)$ shows that all the $P(x_i)$ equal the same constant. With the probabilities summing to 1, $P(x_i) = 1/N = 1/6$, since $N = 6$.
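As a numerical cross-check, here is a sketch that swaps in a different technique, projected gradient ascent, instead of solving the Lagrange equations by hand. Starting from the lopsided guess (five faces at 0.05, one at 0.75), it repeatedly nudges the probabilities uphill in entropy while keeping their sum fixed at 1. The function names, step size, and iteration count are illustrative assumptions, not tuned values.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits (assumes every p > 0)."""
    return sum(-p * math.log2(p) for p in probs)


def entropy_gradient(p):
    # dH/dp_i = -log2(p_i) - 1/ln 2
    return [-math.log2(x) - 1 / math.log(2) for x in p]


def maximize_entropy(p, step=0.01, iters=2000):
    """Projected gradient ascent on H(p) subject to sum(p) == 1.

    Subtracting the mean of the gradient projects each step onto the
    plane sum(p) == 1, so the constraint keeps holding. Returns the final
    probabilities and the mean gradient at that point, which plays the
    role of the Lagrange multiplier.
    """
    p = list(p)
    for _ in range(iters):
        grad = entropy_gradient(p)
        mean_grad = sum(grad) / len(grad)
        p = [x + step * (g - mean_grad) for x, g in zip(p, grad)]
    grad = entropy_gradient(p)
    return p, sum(grad) / len(grad)


# Start from the lopsided guess: five faces at 0.05, one at 0.75.
p, lam = maximize_entropy([0.05] * 5 + [0.75])
print([round(x, 4) for x in p])   # [0.1667, 0.1667, 0.1667, 0.1667, 0.1667, 0.1667]
print(round(entropy_bits(p), 3))  # 2.585
print(round(lam, 3), round(math.log2(6) - 1 / math.log(2), 3))
```

At the fixed point every component of the gradient equals the same constant, which is the Lagrange condition $\partial H/\partial P(x_i) = \lambda$. With $P(x_i) = 1/6$ that constant is $\log_2 6 - 1/\ln 2 \approx 1.142$, which is what the last line compares.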

While this is a lot of work to derive the obvious, there is a purpose. In more complicated situations, where the probability distribution is not obvious, this method still works. One example is Planck's black body emission curve: given the quantization of energy levels, together with a constraint on the average energy, you can derive the black body curve! This principle is woven all through nature. Learn it, because it will serve you well.

Some interesting Notes to myself — myself? I meant me.

- Maximum Entropy Modeling (local PDF), including some open source software
